Portal:Computer programming

Portal maintenance status: (September 2019)

|

The Computer Programming Portal

Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic.

Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), implementation of build systems, and management of derived artifacts, such as programs' machine code. While these are sometimes considered programming, often the term software development is used for this larger overall process – with the terms programming, implementation, and coding reserved for the writing and editing of code per se. Sometimes software development is known as software engineering, especially when it employs formal methods or follows an engineering design process. (Full article...)

Selected articles -

-

Image 1

Image 1

Computer class at Chkalovski Village School No. 2 in 1985–1986

The history of computing in the Soviet Union began in the late 1940s, when the country began to develop its Small Electronic Calculating Machine (MESM) at the Kiev Institute of Electrotechnology in Feofaniya. Initial ideological opposition to cybernetics in the Soviet Union was overcome by a Khrushchev era policy that encouraged computer production.

By the early 1970s, the uncoordinated work of competing government ministries had left the Soviet computer industry in disarray. Due to lack of common standards for peripherals and lack of digital storage capacity the Soviet Union's technology significantly lagged behind the West's semiconductor industry. The Soviet government decided to abandon development of original computer designs and encouraged cloning of existing Western systems (e.g. the 1801 CPU series was scrapped in favor of the PDP-11 ISA by the early 1980s).

Soviet industry was unable to mass-produce computers to acceptable quality standards and locally manufactured copies of Western hardware were unreliable. As personal computers spread to industries and offices in the West, the Soviet Union's technological lag increased. (Full article...) -

Image 2COBOL (/ˈkoʊbɒl, -bɔːl/; an acronym for "common business-oriented language") is a compiled English-like computer programming language designed for business use. It is an imperative, procedural, and, since 2002, object-oriented language. COBOL is primarily used in business, finance, and administrative systems for companies and governments. COBOL is still widely used in applications deployed on mainframe computers, such as large-scale batch and transaction processing jobs. Many large financial institutions were developing new systems in the language as late as 2006, but most programming in COBOL today is purely to maintain existing applications. Programs are being moved to new platforms, rewritten in modern languages, or replaced with other software.

Image 2COBOL (/ˈkoʊbɒl, -bɔːl/; an acronym for "common business-oriented language") is a compiled English-like computer programming language designed for business use. It is an imperative, procedural, and, since 2002, object-oriented language. COBOL is primarily used in business, finance, and administrative systems for companies and governments. COBOL is still widely used in applications deployed on mainframe computers, such as large-scale batch and transaction processing jobs. Many large financial institutions were developing new systems in the language as late as 2006, but most programming in COBOL today is purely to maintain existing applications. Programs are being moved to new platforms, rewritten in modern languages, or replaced with other software.

COBOL was designed in 1959 by CODASYL and was partly based on the programming language FLOW-MATIC, designed by Grace Hopper. It was created as part of a U.S. Department of Defense effort to create a portable programming language for data processing. It was originally seen as a stopgap, but the Defense Department promptly pressured computer manufacturers to provide it, resulting in its widespread adoption. It was standardized in 1968 and has been revised five times. Expansions include support for structured and object-oriented programming. The current standard is ISO/IEC 1989:2023.

COBOL statements have prose syntax such asMOVE x TO y, which was designed to be self-documenting and highly readable. However, it is verbose and uses over 300 reserved words compared to the succinct and mathematically inspired syntax of other languages. (Full article...) -

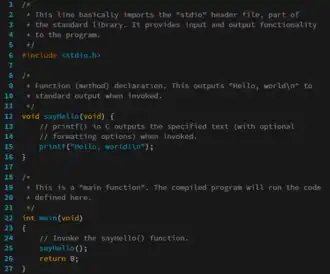

Image 3

Image 3

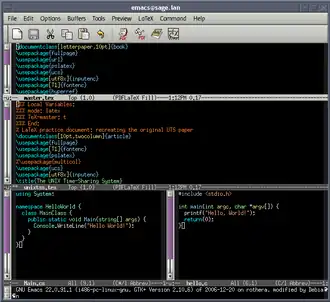

The source code for a computer program in C. The gray lines are comments that explain the program to humans. When compiled and run, it will give the output "Hello, world!".

A programming language is an artificial language for expressing computer programs.

Programming languages typically allow software to be written in a human readable manner.

Execution of a program requires an implementation. There are two main approaches for implementing a programming language – compilation, where programs are compiled ahead-of-time to machine code, and interpretation, where programs are directly executed. In addition to these two extremes, some implementations use hybrid approaches such as just-in-time compilation and bytecode interpreters. (Full article...) -

Image 4

Image 4.jpg)

Portion of the calculating machine with a printing mechanism of the analytical engine, built by Charles Babbage, as displayed at the Science Museum (London)

The analytical engine was a proposed digital mechanical general-purpose computer designed by the English mathematician and computer pioneer Charles Babbage. It was first described in 1837 as the successor to Babbage's difference engine, which was a design for a simpler mechanical calculator.

The analytical engine incorporated an arithmetic logic unit, control flow in the form of conditional branching and loops, and integrated memory, making it the first design for a general-purpose computer that could be described in modern terms as Turing-complete. In other words, the structure of the analytical engine was essentially the same as that which has dominated computer design in the electronic era. The analytical engine is one of the most successful achievements of Charles Babbage.

Babbage was never able to complete construction of any of his machines due to conflicts with his chief engineer and inadequate funding. It was not until 1941 that Konrad Zuse built the first general-purpose computer, Z3, more than a century after Babbage had proposed the pioneering analytical engine in 1837. (Full article...) -

Image 5In the C++ programming language,

Image 5In the C++ programming language,decltypeis a keyword used to query the type of an expression. Introduced in C++11, its primary intended use is in generic programming, where it is often difficult, or even impossible, to express types that depend on template parameters.

As generic programming techniques became increasingly popular throughout the 1990s, the need for a type-deduction mechanism was recognized. Many compiler vendors implemented their own versions of the operator, typically calledtypeof, and some portable implementations with limited functionality, based on existing language features were developed. In 2002, Bjarne Stroustrup proposed that a standardized version of the operator be added to the C++ language, and suggested the name "decltype", to reflect that the operator would yield the "declared type" of an expression.decltype's semantics were designed to cater to both generic library writers and novice programmers. In general, the deduced type matches the type of the object or function exactly as declared in the source code. Like thesizeofoperator,decltype's operand is not evaluated. (Full article...) -

Image 6

Image 6 Hamilton in 1995

Hamilton in 1995

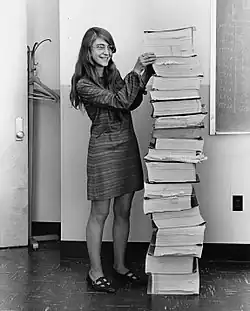

Margaret Elaine Hamilton (née Heafield; born August 17, 1936) is an American computer scientist. She directed the Software Engineering Division at the MIT Instrumentation Laboratory, where she led the development of the on-board flight software for NASA's Apollo Guidance Computer for the Apollo program. She later founded two software companies, Higher Order Software in 1976 and Hamilton Technologies in 1986, both in Cambridge, Massachusetts.

Hamilton has published more than 130 papers, proceedings, and reports, about sixty projects, and six major programs. She coined the term "software engineering", stating "I began to use the term 'software engineering' to distinguish it from hardware and other kinds of engineering, yet treat each type of engineering as part of the overall systems engineering process."

On November 22, 2016, Hamilton received the Presidential Medal of Freedom from president Barack Obama for her work leading to the development of on-board flight software for NASA's Apollo Moon missions. (Full article...) -

![Image 7 Ada is a structured, statically typed, imperative, and object-oriented high-level programming language, inspired by Pascal and other languages. It has built-in language support for design by contract (DbC), extremely strong typing, explicit concurrency, tasks, synchronous message passing, protected objects, and non-determinism. Ada improves code safety and maintainability by using the compiler to find errors in favor of runtime errors. Ada is an international technical standard, jointly defined by the International Organization for Standardization (ISO), and the International Electrotechnical Commission (IEC). As of May 2023[update], the standard, ISO/IEC 8652:2023, is called Ada 2022 informally. Ada was originally designed by a team led by French computer scientist Jean Ichbiah of Honeywell under contract to the United States Department of Defense (DoD) from 1977 to 1983 to supersede over 450 programming languages then used by the DoD. Ada was named after Ada Lovelace (1815–1852), who has been credited as the first computer programmer. (Full article...)](./_assets_/Blank.png) Image 7

Image 7

Ada is a structured, statically typed, imperative, and object-oriented high-level programming language, inspired by Pascal and other languages. It has built-in language support for design by contract (DbC), extremely strong typing, explicit concurrency, tasks, synchronous message passing, protected objects, and non-determinism. Ada improves code safety and maintainability by using the compiler to find errors in favor of runtime errors. Ada is an international technical standard, jointly defined by the International Organization for Standardization (ISO), and the International Electrotechnical Commission (IEC). As of May 2023, the standard, ISO/IEC 8652:2023, is called Ada 2022 informally.

Ada was originally designed by a team led by French computer scientist Jean Ichbiah of Honeywell under contract to the United States Department of Defense (DoD) from 1977 to 1983 to supersede over 450 programming languages then used by the DoD. Ada was named after Ada Lovelace (1815–1852), who has been credited as the first computer programmer. (Full article...) -

Image 8

Image 8 Screenshot of JavaScript source code

Screenshot of JavaScript source code

JavaScript (JS) is a programming language and core technology of the web platform, alongside HTML and CSS. Ninety-nine percent of websites on the World Wide Web use JavaScript on the client side for webpage behavior.

Web browsers have a dedicated JavaScript engine that executes the client code. These engines are also utilized in some servers and a variety of apps. The most popular runtime system for non-browser usage is Node.js.

JavaScript is a high-level, often just-in-time–compiled language that conforms to the ECMAScript standard. It has dynamic typing, prototype-based object-orientation, and first-class functions. It is multi-paradigm, supporting event-driven, functional, and imperative programming styles. It has application programming interfaces (APIs) for working with text, dates, regular expressions, standard data structures, and the Document Object Model (DOM). (Full article...) -

Image 9Prolog is a logic programming language that has its origins in artificial intelligence, automated theorem proving, and computational linguistics.

Image 9Prolog is a logic programming language that has its origins in artificial intelligence, automated theorem proving, and computational linguistics.

Prolog has its roots in first-order logic, a formal logic. Unlike many other programming languages, Prolog is intended primarily as a declarative programming language: the program is a set of facts and rules, which define relations. A computation is initiated by running a query over the program.

Prolog was one of the first logic programming languages and remains the most popular such language today, with several free and commercial implementations available. The language has been used for theorem proving, expert systems, term rewriting, type systems, and automated planning, as well as its original intended field of use, natural language processing. (Full article...) -

Image 10

Image 10

A computer lab contains a wide range of information technology elements,

including hardware, software and storage systems.

Information technology (IT) is the study or use of computers, telecommunication systems and other devices to create, process, store, retrieve and transmit information. While the term is commonly used to refer to computers and computer networks, it also encompasses other information distribution technologies such as television and telephones. Information technology is an application of computer science and computer engineering.

An information technology system (IT system) is generally an information system, a communications system, or, more specifically speaking, a computer system — including all hardware, software, and peripheral equipment — operated by a limited group of IT users, and an IT project usually refers to the commissioning and implementation of an IT system. IT systems play a vital role in facilitating efficient data management, enhancing communication networks, and supporting organizational processes across various industries. Successful IT projects require meticulous planning and ongoing maintenance to ensure optimal functionality and alignment with organizational objectives.

Although humans have been storing, retrieving, manipulating, analysing and communicating information since the earliest writing systems were developed, the term information technology in its modern sense first appeared in a 1958 article published in the Harvard Business Review; authors Harold J. Leavitt and Thomas L. Whisler commented that "the new technology does not yet have a single established name. We shall call it information technology (IT)." Their definition consists of three categories: techniques for processing, the application of statistical and mathematical methods to decision-making, and the simulation of higher-order thinking through computer programs. (Full article...) -

Image 11

Image 11.jpg)

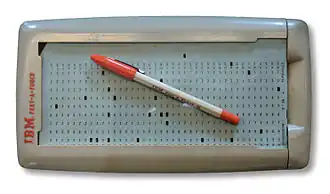

A 12-row/80-column IBM punched card from the mid-twentieth century

A punched card (also known as a punch card or Hollerith card) is a stiff paper-based medium used to store digital information through the presence or absence of holes in predefined positions. Developed from earlier uses in textile looms such as the Jacquard loom (1800s), the punched card was first widely implemented in data processing by Herman Hollerith for the 1890 United States Census. His innovations led to the formation of companies that eventually became IBM.

Punched cards became essential to business, scientific, and governmental data processing during the 20th century, especially in unit record machines and early digital computers. The most well-known format was the IBM 80-column card introduced in 1928, which became an industry standard. Cards were used for data input, storage, and software programming. Though rendered obsolete by magnetic media and terminals by the 1980s, punched cards influenced lasting conventions such as the 80-character line length in computing, and as of 2012, were still used in some voting machines to record votes. Today, they are remembered as icons of early automation and computing history. Their legacy persists in modern computing, notably influencing the 80-character line standard in command-line interfaces and programming environments. (Full article...) -

Image 12

Image 12

Python is a high-level, general-purpose programming language. Its design philosophy emphasizes code readability with the use of significant indentation.

Python is dynamically type-checked and garbage-collected. It supports multiple programming paradigms, including structured (particularly procedural), object-oriented and functional programming.

Guido van Rossum began working on Python in the late 1980s as a successor to the ABC programming language, currently supported are only versions in the 3.x series. Python 3.0, released in 2008, was a major revision not completely backward-compatible with earlier versions. Recent versions, such as Python 3.12, have added capabilites and keywords for typing (and more; e.g. increasing speed); helping with (optional) static typing. (Full article...) -

Image 13

Image 13 Logo

Logo

Swift is a high-level general-purpose, multi-paradigm, compiled programming language created by Chris Lattner in 2010 for Apple Inc. and maintained by the open-source community. Swift compiles to machine code and uses an LLVM-based compiler. Swift was first released in June 2014 and the Swift toolchain has shipped in Xcode since Xcode version 6, released in September 2014.

Apple intended Swift to support many core concepts associated with Objective-C, notably dynamic dispatch, widespread late binding, extensible programming, and similar features, but in a "safer" way, making it easier to catch software bugs; Swift has features addressing some common programming errors like null pointer dereferencing and provides syntactic sugar to help avoid the pyramid of doom. Swift supports the concept of protocol extensibility, an extensibility system that can be applied to types, structs and classes, which Apple promotes as a real change in programming paradigms they term "protocol-oriented programming" (similar to traits and type classes).

Swift was introduced at Apple's 2014 Worldwide Developers Conference (WWDC). It underwent an upgrade to version 1.2 during 2014 and a major upgrade to Swift 2 at WWDC 2015. It was initially a proprietary language, but version 2.2 was made open-source software under the Apache License 2.0 on December 3, 2015, for Apple's platforms and Linux. (Full article...) -

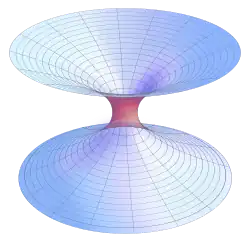

Image 14Artificial intelligence (AI) is the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals.

Image 14Artificial intelligence (AI) is the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals.

High-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); virtual assistants (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., language models and AI art); and superhuman play and analysis in strategy games (e.g., chess and Go). However, many AI applications are not perceived as AI: "A lot of cutting edge AI has filtered into general applications, often without being called AI because once something becomes useful enough and common enough it's not labeled AI anymore."

Various subfields of AI research are centered around particular goals and the use of particular tools. The traditional goals of AI research include learning, reasoning, knowledge representation, planning, natural language processing, perception, and support for robotics. To reach these goals, AI researchers have adapted and integrated a wide range of techniques, including search and mathematical optimization, formal logic, artificial neural networks, and methods based on statistics, operations research, and economics. AI also draws upon psychology, linguistics, philosophy, neuroscience, and other fields. Some companies, such as OpenAI, Google DeepMind and Meta, aim to create artificial general intelligence (AGI)—AI that can complete virtually any cognitive task at least as well as a human. (Full article...) -

Image 15The Antikythera mechanism (/ˌæntɪkɪˈθɪərə/ AN-tik-ih-THEER-ə, US also /ˌæntaɪkɪˈ-/ AN-ty-kih-) is an ancient Greek hand-powered orrery (model of the Solar System). It is the oldest known example of an analogue computer. It could be used to predict astronomical positions and eclipses decades in advance. It could also be used to track the four-year cycle of athletic games similar to an olympiad, the cycle of the ancient Olympic Games.

Image 15The Antikythera mechanism (/ˌæntɪkɪˈθɪərə/ AN-tik-ih-THEER-ə, US also /ˌæntaɪkɪˈ-/ AN-ty-kih-) is an ancient Greek hand-powered orrery (model of the Solar System). It is the oldest known example of an analogue computer. It could be used to predict astronomical positions and eclipses decades in advance. It could also be used to track the four-year cycle of athletic games similar to an olympiad, the cycle of the ancient Olympic Games.

The artefact was among wreckage retrieved from a shipwreck off the coast of the Greek island Antikythera in 1901. In 1902, during a visit to the National Archaeological Museum in Athens, it was noticed by Greek politician Spyridon Stais as containing a gear, prompting the first study of the fragment by his cousin, Valerios Stais, the museum director. The device, housed in the remains of a wooden-framed case of (uncertain) overall size 34 cm × 18 cm × 9 cm (13.4 in × 7.1 in × 3.5 in), was found as one lump, later separated into three main fragments which are now divided into 82 separate fragments after conservation efforts. Four of these fragments contain gears, while inscriptions are found on many others. The largest gear is about 13 cm (5 in) in diameter and originally had 223 teeth. All these fragments of the mechanism are kept at the National Archaeological Museum, along with reconstructions and replicas, to demonstrate how it may have looked and worked.

In 2005, a team from Cardiff University led by Mike Edmunds used computer X-ray tomography and high resolution scanning to image inside fragments of the crust-encased mechanism and read the faintest inscriptions that once covered the outer casing. These scans suggest that the mechanism had 37 meshing bronze gears enabling it to follow the movements of the Moon and the Sun through the zodiac, to predict eclipses and to model the irregular orbit of the Moon, where the Moon's velocity is higher in its perigee than in its apogee. This motion was studied in the 2nd century BC by astronomer Hipparchus of Rhodes, and he may have been consulted in the machine's construction. There is speculation that a portion of the mechanism is missing and it calculated the positions of the five classical planets. The inscriptions were further deciphered in 2016, revealing numbers connected with the synodic cycles of Venus and Saturn. (Full article...)

Selected images

-

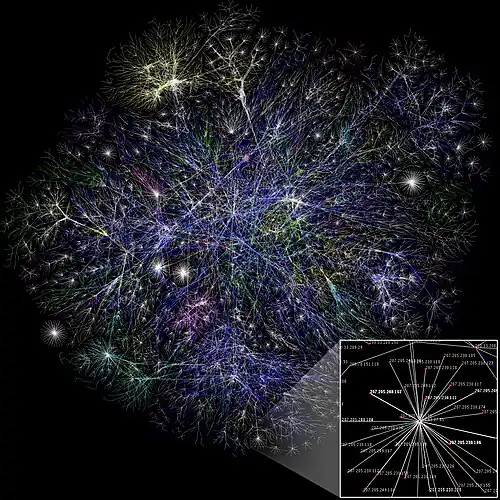

Image 1Partial map of the Internet based on the January 15, 2005 data found on opte.org. Each line is drawn between two nodes, representing two IP addresses. The length of the lines are indicative of the delay between those two nodes. This graph represents less than 30% of the Class C networks reachable by the data collection program in early 2005.

Image 1Partial map of the Internet based on the January 15, 2005 data found on opte.org. Each line is drawn between two nodes, representing two IP addresses. The length of the lines are indicative of the delay between those two nodes. This graph represents less than 30% of the Class C networks reachable by the data collection program in early 2005. -

Image 2Margaret Hamilton standing next to the navigation software that she and her MIT team produced for the Apollo Project.

Image 2Margaret Hamilton standing next to the navigation software that she and her MIT team produced for the Apollo Project. -

_in_Dark_theme.png) Image 3GNOME Shell, GNOME Clocks, Evince, gThumb and GNOME Files at version 3.30, in a dark theme

Image 3GNOME Shell, GNOME Clocks, Evince, gThumb and GNOME Files at version 3.30, in a dark theme -

Image 4Grace Hopper at the UNIVAC keyboard, c. 1960. Grace Brewster Murray: American mathematician and rear admiral in the U.S. Navy who was a pioneer in developing computer technology, helping to devise UNIVAC I. the first commercial electronic computer, and naval applications for COBOL (common-business-oriented language).

Image 4Grace Hopper at the UNIVAC keyboard, c. 1960. Grace Brewster Murray: American mathematician and rear admiral in the U.S. Navy who was a pioneer in developing computer technology, helping to devise UNIVAC I. the first commercial electronic computer, and naval applications for COBOL (common-business-oriented language). -

Image 5An IBM Port-A-Punch punched card

Image 5An IBM Port-A-Punch punched card -

Image 6Output from a (linearised) shallow water equation model of water in a bathtub. The water experiences 5 splashes which generate surface gravity waves that propagate away from the splash locations and reflect off of the bathtub walls.

Image 6Output from a (linearised) shallow water equation model of water in a bathtub. The water experiences 5 splashes which generate surface gravity waves that propagate away from the splash locations and reflect off of the bathtub walls. -

Image 7A lone house. An image made using Blender 3D.

Image 7A lone house. An image made using Blender 3D. -

-

Image 9Partial view of the Mandelbrot set. Step 1 of a zoom sequence: Gap between the "head" and the "body" also called the "seahorse valley".

Image 9Partial view of the Mandelbrot set. Step 1 of a zoom sequence: Gap between the "head" and the "body" also called the "seahorse valley". -

Image 10Stephen Wolfram is a British-American computer scientist, physicist, and businessman. He is known for his work in computer science, mathematics, and in theoretical physics.

Image 10Stephen Wolfram is a British-American computer scientist, physicist, and businessman. He is known for his work in computer science, mathematics, and in theoretical physics. -

Image 11This image (when viewed in full size, 1000 pixels wide) contains 1 million pixels, each of a different color.

Image 11This image (when viewed in full size, 1000 pixels wide) contains 1 million pixels, each of a different color. -

Image 12Ada Lovelace was an English mathematician and writer, chiefly known for her work on Charles Babbage's proposed mechanical general-purpose computer, the Analytical Engine. She was the first to recognize that the machine had applications beyond pure calculation, and to have published the first algorithm intended to be carried out by such a machine. As a result, she is often regarded as the first computer programmer.

Image 12Ada Lovelace was an English mathematician and writer, chiefly known for her work on Charles Babbage's proposed mechanical general-purpose computer, the Analytical Engine. She was the first to recognize that the machine had applications beyond pure calculation, and to have published the first algorithm intended to be carried out by such a machine. As a result, she is often regarded as the first computer programmer. -

-

Image 14A view of the GNU nano Text editor version 6.0

Image 14A view of the GNU nano Text editor version 6.0 -

-

Image 16Deep Blue was a chess-playing expert system run on a unique purpose-built IBM supercomputer. It was the first computer to win a game, and the first to win a match, against a reigning world champion under regular time controls. Photo taken at the Computer History Museum.

Image 16Deep Blue was a chess-playing expert system run on a unique purpose-built IBM supercomputer. It was the first computer to win a game, and the first to win a match, against a reigning world champion under regular time controls. Photo taken at the Computer History Museum. -

-

Image 18A head crash on a modern hard disk drive

Image 18A head crash on a modern hard disk drive

Did you know? -

- ... that Cornell University's student-oriented programming language dialect was made available to other universities but required a "research grant" payment in exchange?

- ... that the programming language Acorn System BASIC was so non-standard that one commenter suggested that using it on the BBC Micro would be a disaster?

- ... that the Gale–Shapley algorithm was used to assign medical students to residencies long before its publication by Gale and Shapley?

- ... that the 2024 psychological horror game Mouthwashing utilises non-diegetic scene transitions that mimic glitches and crashes?

- ... that it took a particle accelerator and machine-learning algorithms to extract the charred text of PHerc. Paris. 4 without unrolling it?

- ... that Phil Fletcher as Hacker T. Dog caused Lauren Layfield to make the "most famous snort" in the United Kingdom in 2016?

Subcategories

WikiProjects

![]()

- There are many users interested in computer programming, join them.

- WikiProject Computing

- WikiProject Computer science

- WikiProject C/C++

- WikiProject Java

- WikiProject Cryptography

- WikiProject Software

Computer programming news

No recent news

Topics

|

Related portals

Associated Wikimedia

The following Wikimedia Foundation sister projects provide more on this subject:

-

Commons

Free media repository -

Wikibooks

Free textbooks and manuals -

Wikidata

Free knowledge base -

Wikinews

Free-content news -

Wikiquote

Collection of quotations -

Wikisource

Free-content library -

Wikiversity

Free learning tools -

Wiktionary

Dictionary and thesaurus

-

List of all portals

List of all portals -

-

-

-

-

-

-

-

-

-

Random portal

Random portal -

WikiProject Portals

WikiProject Portals